Secretary Perry continues to ignore the evidence on grid reliability, even his own

Late Wednesday night, the U.S. Department of Energy (DOE) released its so-called “study” on grid reliability.

Secretary Perry commissioned the report in an April memo, asking the DOE to investigate whether our electric grid's reliability is threatened by the "erosion of critical baseload resources," meaning coal and nuclear power plants. Perry took the unusual step of supplying his own pre-study conclusion, claiming that "baseload power is necessary to a well-functioning electric grid."

His own report disagrees. It's largely a backward-looking report that sometimes argues with itself, but it comes, albeit grudgingly, to the same conclusion as every other recent study: the electric grid continues to operate reliably as uneconomic coal generation declines. Moreover, coal is declining because it can't compete, and other resources are ensuring reliability at more affordable rates.

Perry, however, seems undeterred by the evidence, and the report's accompanying cover letter and recommendations appear ready to double down on his pro-coal agenda. Here are three ways he tries to twist the facts in favor of dirty coal, a move that ignores more efficient, affordable, and innovative solutions and comes at a cost to Americans.

The misdirection spin

Perry’s letter accompanying the report included this nugget:

“It is apparent that in today’s competitive markets certain regulations and subsidies are having a large impact on the functioning of markets, and thereby challenging our power generation mix.”

Although Secretary Perry continues to blame “regulations and subsidies” for “challenging” the power generation mix (despite the mix becoming more, not less, diverse as more renewables come online), he would be well advised to read his own report if he’s looking for the real driver of coal retirements. The report he commissioned clearly states:

“The biggest contributor to coal and nuclear plant retirements has been the advantaged economics of natural gas-fired generation.”


This is hardly new information; extensive research has found that "decreases in natural gas prices have had a much larger impact on the profitability of conventional generators than the growth of renewable energy." Coal is simply too costly to compete. And low natural gas prices, along with flat demand for electricity, are making energy more affordable.

The reliability spin

On reliability, Perry's letter again takes one stance:

“The industry has experienced massive change in recent years, and government has failed to keep pace.”

And the report states the opposite:

Grid operators “are working hard to integrate growing levels of [renewable energy] through extensive study, deliberative planning, and careful operations and adjustments.”

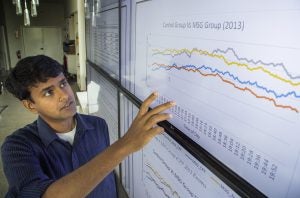

Perry appears unaware of the thorough work his own study describes, but grid operators are required by law to ensure reliable electricity at affordable rates. And government has indeed kept pace. As the DOE report noted, the North American Electric Reliability Corporation's most recent annual State of Reliability analysis concluded that the electric grid was reliable in 2016. And 2015. And 2014. And 2013. And although the DOE report neglected to mention it, this same State of Reliability analysis found that reliability has been increasing.

Certainly, more can and should be done. As the DOE report mentions, increasing the use of fast, flexible resources supports a healthy grid. Unfortunately for Perry, coal can't provide what's needed, as the DOE report notes:

“For a power plant to make money today, it must be able to ramp up and down to coincide with the variable levels of renewable generation coming online. That makes combined cycle natural gas plants profitable…but coal plants have relatively high and fixed operating costs and are relatively inflexible.”

Coal, simply put, is too slow and old to respond nimbly.

The resiliency spin

Perry also attempts to pivot from reliability to resiliency, a less well-defined term:

“Customers should know that a resilient electric grid does come with a price.”

Like everything worth having, resiliency comes at a price, but that price should be cost-effective. Coal, however, is part of the problem, not the solution, when it comes to achieving affordable, resilient, and reliable electricity. Not only do coal-fired power plants break down unexpectedly more often than any other resource, they have also performed poorly during extreme weather events, as his report notes:

During extreme weather events in 2014 “many coal plants could not operate due to conveyor belts and coal piles freezing.”

"Forced outages," meaning instances when a power station cannot produce power because of an unexpected breakdown, are higher for coal than for any other resource: almost twice as high, in fact, as for the next highest resource. Coal also needs twice as much scheduled maintenance, referred to as "planned outages," as any other resource.

A terrible solution in search of a problem

When Secretary Perry originally requested the DOE report, he already knew the grid reliability answer he was looking for. Unfortunately for him, the final report – despite best efforts – only further illustrates why his pre-study pro-coal conclusion is wrong. The report’s recommendations and his letter double down on coal despite the evidence, no matter the cost to the American public; no matter the cost to human health and the environment; and no matter the cost to the well-being of the electric grid itself.

Now that the report is finally here, coal companies will likely continue complaining and seeking help for their uneconomic power plants. Meanwhile, America's grid will continue to embrace new, innovative technology that builds a clean, reliable, affordable, and resilient energy system.
